Written by Adrian Chow with contributions from Jonathan Yuen and Wintersoldier

Executive Summary:

- Oracles play an integral role in securing value locked in DeFi protocols: they help secure $33B of the $50B total value locked in DeFi.

- However, the latency inherent in oracle price updates leads to value extraction in a subset of MEV known as Oracle Extractable Value (OEV), which encompasses oracle frontrunning, arbitrage, and inefficient liquidations.

- There is a growing number of implementation designs that prevent or mitigate the negative externalities of OEV, each with its own tradeoffs. We discuss various existing design choices and their tradeoffs, and propose two nascent ideas, outlining their value propositions, open questions, and development bottlenecks.

Introduction

Oracles are arguably one of the most important pieces of infrastructure in DeFi today. They are an integral component of most DeFi protocols, which rely on price feeds to settle derivative contracts, liquidate undercollateralized positions, and much more. Oracles secure ~$33 billion in value today, which represents over two thirds of the $50 billion total value locked on-chain [1]. However, for application developers, integrating oracles comes with distinct design tradeoffs and challenges that can lead to value leakage through front-running, arbitrage, and inefficient liquidations. In this article, we taxonomize this value leakage as “Oracle Extractable Value” (OEV), provide an overview of its key issues from an application’s perspective, and build upon industry research to illustrate key considerations for integrating oracles safely and securely in DeFi protocols.

Oracle Extractable Value (OEV)

This section assumes basic familiarity with oracle functionality and the differences between push-based and pull-based oracles. Individual oracles may differ in their price feed designs. For an overview, taxonomy, and definitions, see the Appendix.

Most applications that use oracle price feeds only need prices for read-only purposes. Dexes that run their own pricing models use oracle feeds as a reference price, and depositing collateral into an overcollateralized loan position only requires the oracle to read prices to determine initial parameters such as LTV and liquidation price. In these cases, update latency is not a crucial design consideration, except in more extreme cases such as long-tail assets with very infrequent price updates. As such, oracle considerations can be boiled down to an evaluation of the accuracy of the price contributors and the decentralization properties of the oracle provider.

However, in situations where the latency of price feed updates matters, more thought should go into how the oracle interacts with the application. In these cases, latency can create value extraction opportunities, namely frontrunning, arbitrage, and liquidations. This subset of MEV has been coined “Oracle Extractable Value” (OEV) [2]. Below we outline the forms of extractable value caused by oracle latency, before discussing various implementations and their tradeoffs.

Arbitrage

Oracle frontrunning and arbitrage are colloquially known as toxic flow in derivative protocols, as these are trades made with asymmetric information that yield risk-free profits, often at the expense of liquidity providers. OG DeFi protocols such as Synthetix have been combating this issue since 2018 [3], and over time have experimented with various solutions to mitigate these negative externalities.

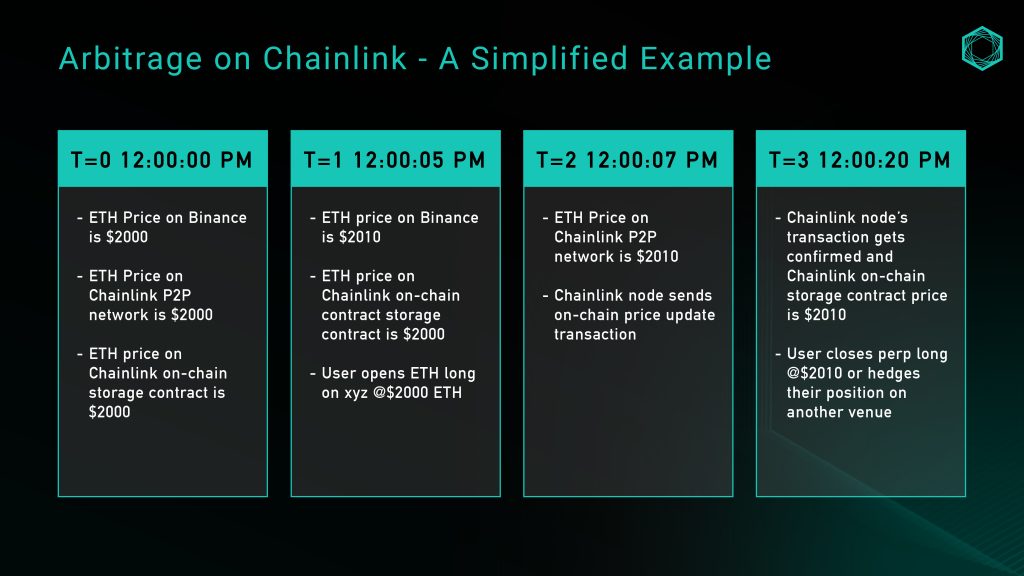

To illustrate, let’s consider a simple example with an ETH/USD price feed on perp dex xyz, which uses Chainlink oracles for the ETH/USD market:

While the example above is overly simplistic and doesn’t consider factors like slippage, fees, or funding, it illustrates the opportunities created by the lack of price granularity that results from deviation threshold-based rules. A searcher can monitor the lag between spot market prices and the price in Chainlink’s on-chain storage, and extract risk-free value from LPs as a result.
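A minimal Python sketch of this arbitrage, using hypothetical numbers and illustrative names (`DeviationOracle`, `hedged_arbitrage`). The heartbeat and all fees are ignored, and the searcher is assumed to go long the perp at the stale on-chain price while shorting spot as a hedge.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DeviationOracle:
    """Toy push oracle: the on-chain price only moves once spot deviates past a threshold."""
    onchain_price: float
    threshold: float  # e.g. 0.005 for a 0.5% deviation threshold (heartbeat ignored)

    def observe(self, spot: float) -> bool:
        """Push spot on-chain if it deviates enough; return True if an update happened."""
        if abs(spot - self.onchain_price) / self.onchain_price >= self.threshold:
            self.onchain_price = spot
            return True
        return False


def hedged_arbitrage(oracle: DeviationOracle, spot_path: List[float],
                     size_eth: float) -> Optional[float]:
    """Long the perp at the stale oracle price, short spot, unwind at the next update.

    Returns the combined USD PnL, 0.0 if there is no exploitable gap, or None if the
    oracle never updates within spot_path (position still open). Fees are ignored.
    """
    entry_spot, entry_perp = spot_path[0], oracle.onchain_price
    gap = (entry_spot - entry_perp) / entry_perp
    if gap <= 0 or gap >= oracle.threshold:
        return 0.0  # spot is not above the stale price, or the oracle would update now
    for spot in spot_path[1:]:
        if oracle.observe(spot):
            perp_pnl = (oracle.onchain_price - entry_perp) * size_eth
            spot_pnl = (entry_spot - spot) * size_eth  # short spot leg closed at the same price
            return perp_pnl + spot_pnl
    return None


# Oracle stuck at 2000 while spot trades at 2009 (0.45% < 0.5%), then spot reaches 2012.
print(hedged_arbitrage(DeviationOracle(2000.0, 0.005), [2009.0, 2012.0], size_eth=1.0))  # 9.0
```

The profit equals the initial gap (2009 - 2000 = 9 USD per ETH) wherever spot is when the oracle catches up, which is why the flow is described as risk-free for the searcher and costly for LPs.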

Frontrunning

Frontrunning, similar to arbitrage, is another form of value extraction: searchers monitor the mempool for pending oracle updates and trade ahead of the new prices before they are committed on-chain. Searchers can bid to have their own transaction ordered before the oracle update, guaranteeing that their trade is filled at the stale price just before the oracle commits a new mark price that moves in their favor.
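A minimal Python sketch of the searcher's decision, assuming the dex fills at the current on-chain mark price and that the searcher's bid gets its transaction ordered ahead of the pending update. The function name and fee parameters are hypothetical.

```python
from typing import Optional, Tuple


def frontrun_plan(onchain_price: float, pending_price: float, size: float,
                  fee_rate: float, priority_fee_usd: float) -> Tuple[Optional[str], float]:
    """Decide whether to trade ahead of an oracle update seen in the mempool.

    onchain_price: mark price the dex fills at before the update lands.
    pending_price: price carried by the pending (not yet included) oracle update.
    fee_rate, priority_fee_usd: hypothetical trading fee and ordering bid.
    Returns (direction, expected_profit_usd); direction is None if unprofitable.
    """
    move = pending_price - onchain_price
    direction = "long" if move > 0 else "short"
    gross = abs(move) * size
    # trading fee on open (old price) and close (new price), plus the ordering bid
    costs = fee_rate * size * (onchain_price + pending_price) + priority_fee_usd
    profit = gross - costs
    return (direction, profit) if profit > 0 else (None, 0.0)


print(frontrun_plan(2000.0, 2010.0, size=5.0, fee_rate=0.0005, priority_fee_usd=3.0))
```

The priority fee is the only price the searcher pays for certainty about the next mark price, so any move larger than fees plus that bid is extractable.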

Perp dexes such as GMX have been victims of such toxic frontrunning, which cut into an estimated 10% of protocol profits until an update routed all GMX oracle updates through the KeeperDAO coordination protocol [4].

But what if we just use a pull-based model?

One of Pyth’s value propositions is that Pythnet, built on Solana’s architecture, can maintain a low-latency price feed where publishers push price updates to the network every 300ms [5]. Hence, when an application queries Pyth prices through its API, it can retrieve the latest price, write it to the destination chain’s on-chain storage, and execute any downstream application logic, all in one transaction.

If applications are able to directly query Pythnet for the latest price update, update the on-chain storage, AND settle all related logic in one transaction, wouldn’t that effectively solve frontrunning and arbitrage?

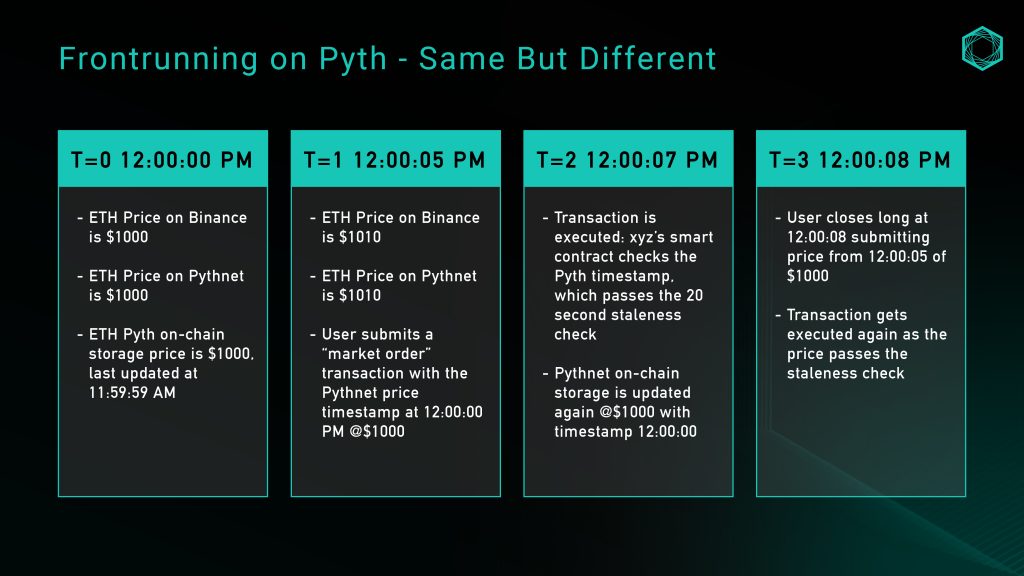

Not exactly – Pyth updates give users some ability to select which prices are used in a transaction, which can lead to adverse selection (another fancy way of referring to toxic flow). While the on-chain storage price must move forward in time, users can still choose any price that satisfies these constraints, which means arbitrage remains possible: searchers can see future prices before choosing to submit a price from the past. Pyth’s docs [6] suggest that a simple way to guard against this attack vector is a staleness check, which ensures that the price is sufficiently recent. However, some buffer must be allowed for the update transaction to be included in the next block, so how do we determine the optimal time threshold?

Let’s consider the same example with perp dex xyz, except this time it uses the Pyth ETH/USD price feed with a staleness check of 20 seconds, meaning the Pyth price’s timestamp must be within 20 seconds of the block timestamp at which the downstream transaction is executed.
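A minimal Python sketch of a 20-second staleness check and of how a searcher can still choose among several valid signed prices. `SignedPrice`, `passes_staleness_check`, and `searcher_pick` are hypothetical names, not Pyth SDK calls, and the prices and timestamps are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SignedPrice:
    """A signed off-chain price update; signature verification is omitted here."""
    price: float
    publish_time: int  # unix seconds


def passes_staleness_check(update: SignedPrice, block_time: int, max_age: int = 20) -> bool:
    """Accept a price only if it is at most max_age seconds old at execution time."""
    return 0 <= block_time - update.publish_time <= max_age


def searcher_pick(updates: List[SignedPrice], block_time: int, is_long: bool,
                  max_age: int = 20) -> SignedPrice:
    """Adverse selection: of all updates the check accepts, submit the best fill."""
    valid = [u for u in updates if passes_staleness_check(u, block_time, max_age)]
    if not valid:
        raise ValueError("no update passes the staleness check")
    # a long wants the lowest acceptable price, a short the highest
    pick = min if is_long else max
    return pick(valid, key=lambda u: u.price)


# Hypothetical updates seen off-chain; the block executes at t = 1000.
updates = [SignedPrice(2000.0, 978), SignedPrice(1990.0, 985),
           SignedPrice(2012.0, 995), SignedPrice(2015.0, 1000)]
print(searcher_pick(updates, block_time=1000, is_long=True).price)
```

With a 20-second window, a searcher who already sees the latest price of 2015 can still open a long at 1990, while the update from t = 978 is rejected. Shrinking `max_age` to 5 leaves only the two most recent updates, so the best long fill becomes 2012, at the cost of more reverts for honest users.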

An intuitive idea would be to simply lower the staleness threshold, but a lower threshold may cause reverts on networks with unpredictable block times, degrading user experience. As Pyth’s price feeds are bridge-reliant, an adequate buffer is required to a) give Wormhole guardians time to attest prices and b) allow the destination chain to process the transaction and include it in a block. More on these tradeoffs in the following section.

Liquidations

Liquidations are a core component of any protocol that involves leverage, and the granularity of price feed updates plays a crucial role in determining the efficiency of liquidations.

For threshold-based push oracles, the granularity (or lack thereof) of price updates can lead to missed liquidation opportunities: spot prices may cross a position’s liquidation threshold without moving far enough to trigger an oracle update under the feed’s preset parameters. This in itself introduces negative externalities in the form of market inefficiencies.
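A small Python sketch of a missed liquidation under a deviation-threshold feed. The position parameters and the 1% threshold are hypothetical, and the liquidation rule is a generic collateral-times-threshold check rather than any specific protocol's formula.

```python
def is_liquidatable(collateral: float, debt_usd: float, price: float,
                    liquidation_threshold: float) -> bool:
    """Liquidatable once collateral value, scaled by the threshold, falls below debt."""
    return collateral * price * liquidation_threshold < debt_usd


def oracle_would_update(onchain_price: float, spot: float, deviation_threshold: float) -> bool:
    """Deviation-only push rule (heartbeat ignored)."""
    return abs(spot - onchain_price) / onchain_price >= deviation_threshold


# Hypothetical position: 1 ETH collateral, 1,500 USD debt, 80% liquidation threshold,
# so it becomes liquidatable below 1,875 USD. The feed last pushed 1,880; spot is 1,870.
onchain, spot = 1880.0, 1870.0
print(is_liquidatable(1.0, 1500.0, spot, 0.8))      # True: liquidatable at spot
print(oracle_would_update(onchain, spot, 0.01))     # False: 0.53% move < 1% threshold
print(is_liquidatable(1.0, 1500.0, onchain, 0.8))   # False: the protocol still sees 1,880
```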

When a liquidation occurs, applications usually pay liquidators a portion of the liquidated collateral, sometimes with additional incentives. Aave, for instance, paid out $37.9 million in liquidation incentives on mainnet alone in 2022 [7]. This vastly overcompensates third parties and gives users poor execution. Additionally, when there is value to be extracted, gas wars emerge and that value leaks from the application up the MEV supply chain.
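A back-of-the-envelope Python sketch of why fixed liquidation incentives can overcompensate liquidators. The 5% bonus, the 20 USD liquidator cost, and the position size are hypothetical and are not Aave's actual parameters.

```python
from typing import Tuple


def liquidation_outcome(repay_usd: float, collateral_price: float, bonus: float,
                        liquidator_cost_usd: float) -> Tuple[float, float, float]:
    """Fixed-bonus liquidation: the liquidator repays debt and seizes collateral at a discount.

    Returns (collateral_seized, bonus_paid_usd, overpayment_usd), where overpayment is
    the bonus in excess of the liquidator's own cost (gas, inventory risk, etc.).
    """
    seized = repay_usd * (1 + bonus) / collateral_price
    bonus_paid = repay_usd * bonus  # value transferred from the borrower to the liquidator
    return seized, bonus_paid, bonus_paid - liquidator_cost_usd


seized, paid, over = liquidation_outcome(10_000.0, 2_000.0, bonus=0.05, liquidator_cost_usd=20.0)
print(f"seized {seized:.2f} ETH, bonus {paid:.2f} USD, overpaid {over:.2f} USD")
```

This prints `seized 5.25 ETH, bonus 500.00 USD, overpaid 480.00 USD`: in this toy case most of the borrower's loss is rent that competition among liquidators turns into gas bids rather than returning it to users.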

Design Space and Considerations

With the issues above in mind, the following section discusses various design implementations for push-based, pull-based, and alternative designs, their effectiveness in combating these issues, and the tradeoffs they introduce, which come in the form of either additional centralisation and trust assumptions or degraded user experience.

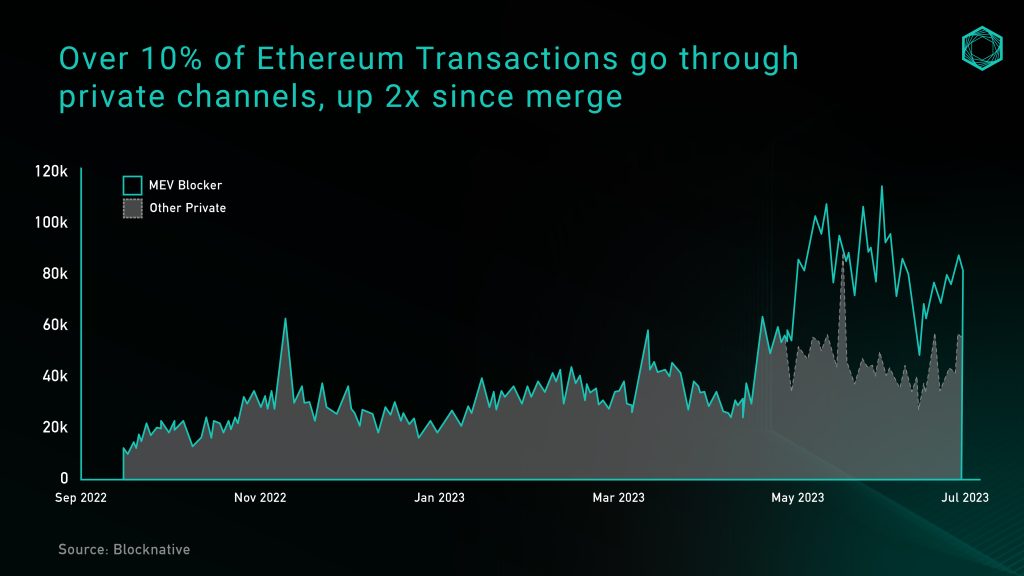

Oracle-specific Order Flow Auctions (OFA)

OFAs have emerged as a solution to combat negative externalities generated by MEV. At a high level, an OFA is a generalized third-party auction service to which users send their orders (transactions or intents), and MEV-extracting searchers bid for the exclusive right to run strategies on those orders. A significant portion of auction proceeds is then returned to the user to compensate them for the value created by their orders. OFAs have seen a surge in adoption recently, with more than 10% of all Ethereum transactions going through a private channel (private RPCs/OFAs) (figure 3), and there are many catalysts for further growth.

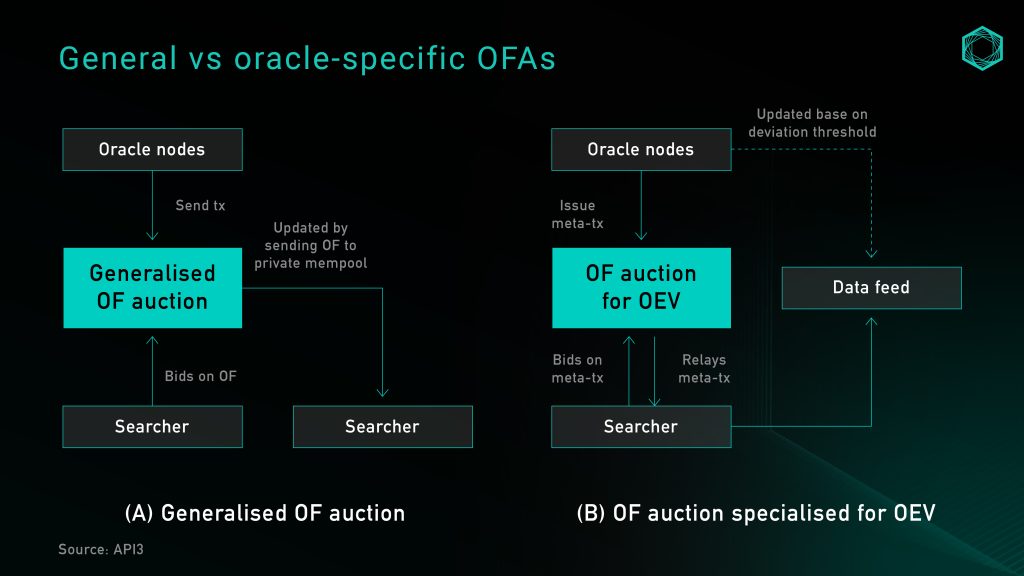

The issue with applying generalized OFAs to oracle updates is that oracles cannot tell in advance whether a standard rule-based update will generate any OEV, and if it does not, sending it through the auction only adds latency. At the other end of the spectrum, the simplest way to streamline OEV and minimize latency is to offer all oracle order flow to a single dominant searcher. However, this obviously introduces significant centralisation risk, could encourage rent extraction and censorship, and leads to poor user experience.

An oracle-specific OFA auctions price updates separately from existing rule-based updates, which still go through the public mempool. This allows oracle price updates, and any extractable value that accompanies them, to be retained within the application layer. As a byproduct, it also increases the granularity of the data, by allowing searchers to request datafeed updates without oracle nodes bearing the additional costs of more frequent updates.

Oracle-specific OFAs are ideal for liquidations, as they lead to more granular price updates, maximize capital returned to liquidated borrowers, reduce the rewards the protocol must pay out to liquidators, and retain value extracted by bidders within the protocol for redistribution back to users. They also address frontrunning and arbitrage to an extent, though not fully. Under perfect competition in a first-price sealed-bid auction, the value searchers extract from frontrunning OEV data feeds should approach the cost of blockspace for executing the opportunity [8], and the increased granularity of price feed updates should reduce the number of arbitrage opportunities created.
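A minimal Python sketch of how a first-price sealed-bid oracle auction splits value. The opportunity size, gas cost, bids, and the 80% user share are hypothetical, and the function is illustrative rather than any OFA's actual interface.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Bid:
    searcher: str
    amount_usd: float  # sealed bid for the exclusive right to trigger the price update


def settle_oev_auction(bids: List[Bid], opportunity_usd: float, gas_usd: float,
                       user_share: float) -> Dict[str, float]:
    """First-price sealed-bid auction: the highest bid wins and pays its own bid.

    user_share is a hypothetical fraction of proceeds redistributed to users; the
    remainder stays with the protocol.
    """
    if not bids:
        raise ValueError("no bids")
    winner = max(bids, key=lambda b: b.amount_usd)
    to_users = winner.amount_usd * user_share
    return {
        "searcher_net": opportunity_usd - winner.amount_usd - gas_usd,
        "to_users": to_users,
        "to_protocol": winner.amount_usd - to_users,
    }


# Three searchers compete for a 1,000 USD opportunity that costs 30 USD of gas to execute.
bids = [Bid("a", 900.0), Bid("b", 960.0), Bid("c", 965.0)]
print(settle_oev_auction(bids, opportunity_usd=1_000.0, gas_usd=30.0, user_share=0.8))
```

As competition pushes the winning bid toward `opportunity_usd - gas_usd` (970 USD here), the searcher's net margin shrinks toward zero and almost all of the value stays with the protocol and its users.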

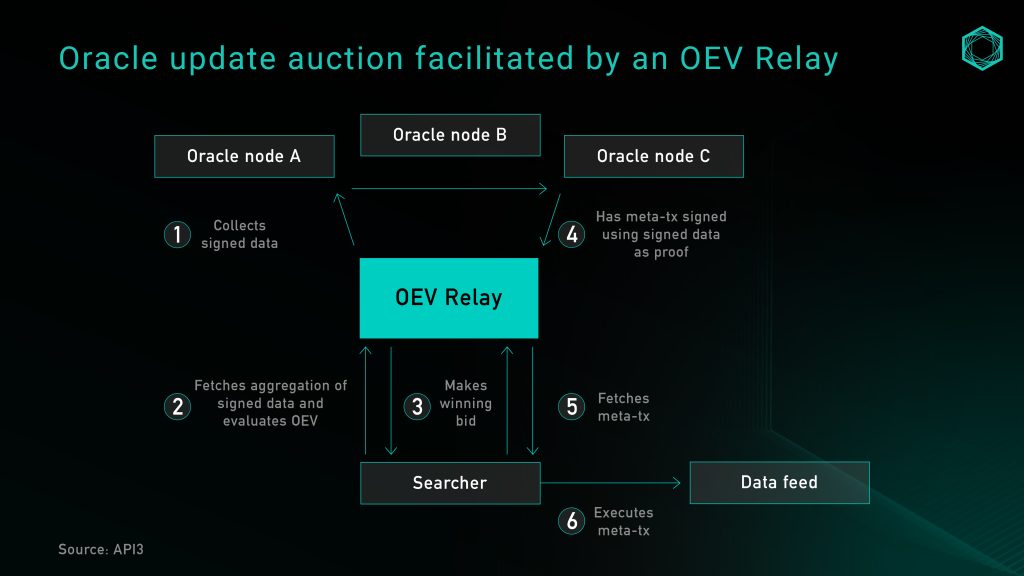

Currently, implementing an oracle-specific OFA requires either integrating with a third-party auction service like OEV-Share or building an auction service into the application. API3 uses an OEV relay (figure 5), inspired by Flashbots: an API with DoS protection that conducts the auctions. The relay is responsible for collecting meta-transactions from oracles, curating and aggregating searcher bids, and redistributing proceeds in a trustless manner without ever having control over bids. When a searcher wins, they can only update a datafeed by transferring the bid amount to a protocol-owned proxy contract, which then updates the price feed with the signed data provided by the relay.
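A minimal Python sketch of the payment-gated update described above, assuming the relay signs (searcher, price, timestamp, bid) for the auction winner. `OevProxy` and `relay_sign` are hypothetical, not API3's contracts, and HMAC stands in for the public-key signature check a real contract would perform.

```python
import hashlib
import hmac
from dataclasses import dataclass


def relay_sign(key: bytes, searcher: str, price: float, timestamp: int, bid: float) -> str:
    """Stand-in for the relay's signature (HMAC keeps the sketch self-contained)."""
    msg = f"{searcher}|{price}|{timestamp}|{bid}".encode()
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


@dataclass
class OevProxy:
    """Hypothetical protocol-owned proxy that only accepts paid-for, relay-signed updates."""
    relay_key: bytes
    price: float = 0.0
    timestamp: int = 0
    proceeds: float = 0.0  # bid payments retained for the protocol and its users

    def update(self, sender: str, paid: float, price: float, timestamp: int,
               bid: float, signature: str) -> None:
        expected = relay_sign(self.relay_key, sender, price, timestamp, bid)
        if not hmac.compare_digest(expected, signature):
            raise PermissionError("update not signed for this searcher and bid")
        if paid < bid:
            raise ValueError("winning bid not transferred")
        if timestamp <= self.timestamp:
            raise ValueError("update is not newer than the stored price")
        self.proceeds += paid
        self.price, self.timestamp = price, timestamp


key = b"relay-demo-key"
proxy = OevProxy(key)
sig = relay_sign(key, "searcher-1", 2010.5, 1_700_000_000, 1.5)
proxy.update("searcher-1", 1.5, 2010.5, 1_700_000_000, 1.5, sig)
print(proxy.price, proxy.proceeds)
```

Because the signature binds the data to the winning searcher and bid, a losing searcher cannot replay it, and the feed can only move once the bid has been paid to the protocol.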

Alternatively, protocols can forgo a middleman and build their own auction service to capture all value extracted from OEV. BBOX is one upcoming protocol looking to embed auctions into its liquidation mechanism to capture OEV and return it to the application and its users [9].

Running a Centralized Node or Keeper

An earlier approach to combating OEV, taken by the first wave of oracle-based perpetual dexes, was to run a centralized keeper network that aggregates prices from third-party sources like centralized exchanges, with something like Chainlink data feeds as a fallback or circuit breaker. This model was popularized by GMX v1 [10] and many of its subsequent forks, and its main value proposition is the outright prevention of frontrunning, since the keeper network is run by a single operator.
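A minimal Python sketch of that keeper pricing pattern, assuming the keeper takes the median of centralized-exchange quotes and falls back to a reference feed when they diverge too far. The function name and the 2% tolerance are hypothetical; GMX v1's actual logic is not reproduced here.

```python
from statistics import median
from typing import List


def keeper_price(cex_quotes: List[float], reference_price: float, max_deviation: float) -> float:
    """Aggregate off-chain quotes, using the reference feed as a circuit breaker.

    cex_quotes: prices the keeper gathers from centralized exchanges (hypothetical sources).
    reference_price: e.g. a Chainlink feed used as fallback.
    max_deviation: hypothetical tolerance, e.g. 0.02 for 2%.
    """
    if not cex_quotes:
        return reference_price  # no quotes: fall back entirely
    aggregated = median(cex_quotes)
    if abs(aggregated - reference_price) / reference_price > max_deviation:
        return reference_price  # circuit breaker trips: quotes disagree with the reference
    return aggregated


print(keeper_price([2001.0, 2003.0, 1999.0], reference_price=2000.0, max_deviation=0.02))
```

Nothing in this computation is visible or verifiable on-chain, which is the crux of the centralization concern that follows.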

While this addresses many of the issues outlined above, it has obvious centralisation concerns. A centralized keeper system can determine the execution price without proper verification of its pricing sources and aggregation methodology. In GMX v1’s case, the keeper is not an on-chain or transparent mechanism, but rather a procedure signed by a team address and run on a centralized server. The keeper’s core role is not only to execute orders, but to “decide” the trading price based on its own preset definitions, with no way to verify the authenticity or source of the execution price used.

Automated Keeper Networks & Chainlink Data Streams

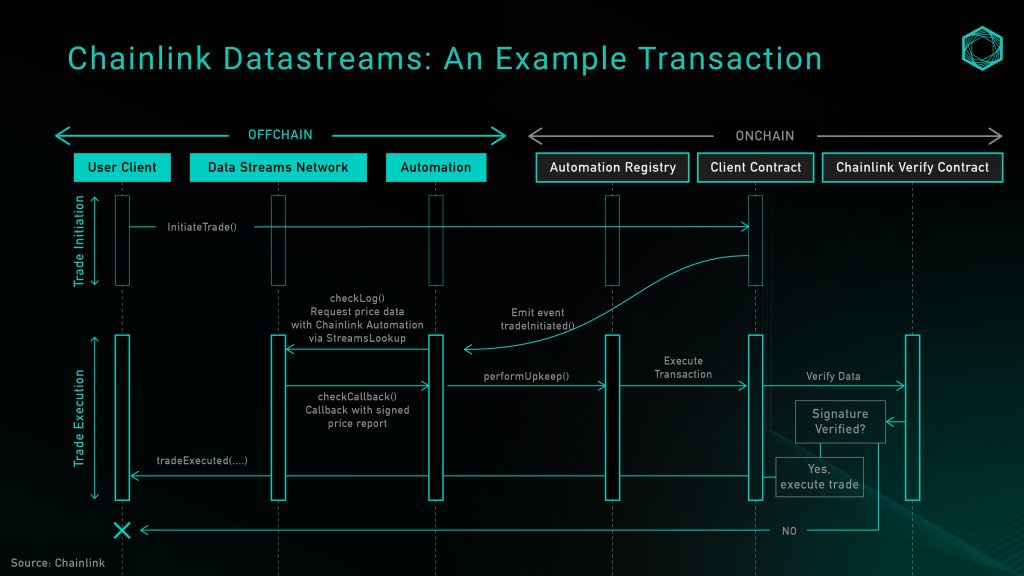

One solution to the aforementioned centralization risks of single-operator keeper networks is to use a third-party service provider for a more decentralized automation network. One such product is Chainlink Automation, which is paired with Chainlink Data Streams, Chainlink’s new pull-based, low-latency oracle. The product was only recently announced and is currently in closed beta, but a working implementation is already used by GMX v2 [11], which can serve as a reference for what a system incorporating this design looks like.

At a high level, Chainlink Data Streams consists of three main components: a Data DON, an Automation DON, and an on-chain verifier contract [12]. The Data DON is an off-chain data network whose architecture is analogous to how Pythnet maintains and aggregates data. The Automation DON is a keeper network secured by the same node operators as the Data DON and is used to pull prices from the Data DON on-chain. Lastly, the verifier contract verifies that off-chain reports were correctly signed.

The diagram above illustrates the transaction flow for calling an open trade function, where the Automation DON is responsible for fetching prices from the Data DON and updating the on-chain storage. Currently, the endpoints for querying the Data DON directly are restricted to whitelisted users, so protocols can choose to offload keeper maintenance to the Automation DON or run their own keepers. Access is expected to shift progressively toward a permissionless structure over the product’s development lifecycle.

On a security level, the trust assumptions of relying on the Automation DON are the same as those of using the Data DON alone, which is a significant improvement over a single-keeper design. Relinquishing price feed updating rights to the Automation DON, however, gives nodes in the keeper network exclusive access to value extraction opportunities. This in turn means that the protocol trusts Chainlink node operators, primarily institutions, to uphold their social reputation and not frontrun its users, analogous to trusting Lido node operators to uphold their reputation and not cartelize blockspace given Lido’s outsized market share.

Pull-based: Delayed Settlement

One of the biggest changes in Synthetix perps v2 was the introduction of Pyth price feeds for settling perp contracts [13]. This allowed orders to be settled using either Chainlink or Pyth prices, provided that the deviation between them does not exceed a predefined threshold and that their timestamps pass the staleness check. However, as mentioned above, simply switching to a pull-based oracle does not rid the protocol of all OEV-related problems. As a solution to frontrunning, a “last look” pricing mechanism was introduced in the form of a delayed order. In practice, this breaks up a user’s market order into two components:

- Transaction #1: An ‘intent’ to open a market order is submitted on-chain, with standard order parameters like size, leverage, collateral, and slippage tolerance. This is accompanied by an additional keeper fee that incentivizes keepers to execute transaction #2.

- Transaction #2: A keeper picks up the order submitted in #1, requests the latest Pyth price feed, and calls the Synthetix execution contract, all in one transaction. The contract checks predefined parameters like staleness and slippage; if all checks pass, the order is executed, the on-chain price storage is updated, and the position is opened. The keeper claims the fee as compensation for gas used and for maintaining the network.
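A minimal Python sketch of the two-transaction flow, assuming the execution step rejects prices published before the intent as well as stale or out-of-tolerance prices. Class, field, and function names are hypothetical and do not mirror Synthetix's contracts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DelayedOrder:
    """Transaction #1: the user's on-chain intent (field names are hypothetical)."""
    size: float           # positive = long, negative = short
    desired_price: float  # user's reference price at submission
    slippage: float       # tolerance, e.g. 0.01 for 1%
    keeper_fee: float     # paid to whichever keeper executes transaction #2 (transfer not modeled)
    submitted_at: int     # block timestamp of transaction #1


@dataclass
class Position:
    size: float
    entry_price: float


def execute_delayed_order(order: DelayedOrder, price: float, publish_time: int,
                          block_time: int, max_age: int) -> Optional[Position]:
    """Transaction #2: a keeper supplies a freshly pulled price; returns the position or None."""
    # Assumption: the price must be published after the intent, so the user cannot pick it.
    if publish_time < order.submitted_at or block_time - publish_time > max_age:
        return None
    if order.size > 0 and price > order.desired_price * (1 + order.slippage):
        return None  # long would fill above tolerance
    if order.size < 0 and price < order.desired_price * (1 - order.slippage):
        return None  # short would fill below tolerance
    return Position(order.size, price)


order = DelayedOrder(size=1.0, desired_price=2000.0, slippage=0.01, keeper_fee=2.0, submitted_at=100)
print(execute_delayed_order(order, price=2010.0, publish_time=105, block_time=110, max_age=20))
```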

This implementation does not give users the opportunity to adversely select prices to submit on-chain, which effectively addresses front-running and arbitrage opportunities for the protocol. However, this design comes with a tradeoff in user experience: the market order is executed over the course of two transactions, and the user is required to subsidize gas fees for the keeper’s execution, alongside the cost of updating the oracle’s on-chain storage. Previously, this tip was a fixed fee of 2 sUSD; it was recently changed to a dynamic fee based on the Optimism gas oracle plus a premium, which varies with layer 2 activity. Regardless, this solution can be thought of as improving LP profitability at the cost of the trader’s user experience.

Pull-based: Optimistic settlement

With delayed orders introducing an additional network fee for users, which scales proportionately with layer 2 DA fees, we internally brainstormed an alternative order settlement model that could potentially lower users’ costs while preserving decentralization and protocol security, which we call “optimistic settlement”. As its name suggests, this mechanism would allow traders to execute market transactions atomically, with the system optimistically accepting all prices while leaving a window for searchers to submit proofs that an order was placed with malicious intent. This section outlines a few iterations of the idea, our thought process, and the open questions that remain.

Our initial thinking was a mechanism in which users submit a price via parsePriceFeedUpdates when opening a market order, after which the user or any third party can submit a settlement transaction with the price feed data, finalizing the trade at the price at transaction confirmation. At settlement, any negative discrepancy between the two prices is applied to the user’s PnL as slippage. Advantages of this method include a reduced cost burden for users and mitigation of frontrunning risks: users no longer have to pay an incentive premium for keepers to settle the order, and frontrunning risk is contained because the settlement price is not known at the time of order submission. However, this still introduces a two-step settlement process, which is one of the shortcomings we identified in Synthetix’s delayed settlement model. The additional settlement transaction may be redundant in most cases, where volatility between order placement and settlement doesn’t exceed system-defined thresholds for profitable frontrunning.
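A minimal Python sketch of the settlement rule in this first iteration, assuming "negative discrepancy" means a move against the trader between submission and confirmation. The function name is hypothetical.

```python
def execution_price(submitted_price: float, confirmation_price: float, is_long: bool) -> float:
    """Fill at the submitted price, but pass on any adverse move as slippage.

    A long pays the higher of the two prices and a short receives the lower, so a
    favorable move between submission and confirmation is never credited to the user.
    """
    if is_long:
        return max(submitted_price, confirmation_price)
    return min(submitted_price, confirmation_price)


# A long submitted at 2000 settles at 2010 if the market moved up before confirmation
print(execution_price(2000.0, 2010.0, is_long=True))  # 2010.0
# but keeps 2000 if the market moved down
print(execution_price(2000.0, 1990.0, is_long=True))  # 2000.0
```

Because a stale, favorable submitted price is overridden at settlement, submitting one gains the trader nothing, which is what contains frontrunning in this design.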

An alternative solution to circumvent the above is to allow the order to be optimistically accepted by the system, then to open up a permissionless challenge period where a proof can be submitted with evidence that the price deviation between the price timestamp and block timestamp allowed for profitable frontrunning.

Here’s how it would work:

- A user creates a market order with the current market price. They then send this price, along with the embedded Pyth price feed bytes data, as an order creation transaction.

- The smart contract optimistically verifies and stores this information.

- Upon confirmation of the order on-chain, there is a challenge period during which searchers can submit proof of adverse selection, i.e. proof that the trader used a stale price feed with the intention of arbitraging the system. If a proof is accepted by the system, the difference in value is applied to the trader’s execution price as slippage, and the excess value is given to the keeper as a reward.

- After the challenge period, all prices are considered valid by the system.
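A minimal Python sketch of these four steps as a state machine. It assumes the keeper's proof reduces to a verified price (`proven_price`) showing the trader was filled at a better price than was fairly available, that the trader is repriced to it, and that a hypothetical `keeper_share` of the recovered value goes to the keeper with the rest returned to LPs. Proof verification is stubbed out and all names are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class OptimisticOrder:
    size: float        # signed: positive = long, negative = short
    exec_price: float  # trader-submitted price, accepted optimistically
    created_at: int    # block timestamp of order creation
    challenged: bool = False


class OptimisticSettlement:
    """Hypothetical sketch of optimistic settlement; proof verification is stubbed out."""

    def __init__(self, challenge_period: int, keeper_share: float):
        self.challenge_period = challenge_period
        self.keeper_share = keeper_share  # fraction of recovered value paid to the keeper
        self.orders: List[OptimisticOrder] = []
        self.keeper_rewards: Dict[str, float] = {}
        self.lp_recovered = 0.0

    def create(self, size: float, submitted_price: float, now: int) -> int:
        """Steps 1-2: store the order at the trader's price in a single transaction."""
        self.orders.append(OptimisticOrder(size, submitted_price, now))
        return len(self.orders) - 1

    def is_final(self, order_id: int, now: int) -> bool:
        """Step 4: after the challenge period the stored price is considered valid."""
        return now > self.orders[order_id].created_at + self.challenge_period

    def challenge(self, order_id: int, keeper: str, proven_price: float, now: int) -> float:
        """Step 3: reprice an adversely selected order and reward the keeper.

        proven_price stands in for a verified signed price; returns the value recovered.
        """
        order = self.orders[order_id]
        if order.challenged or self.is_final(order_id, now):
            raise ValueError("order can no longer be challenged")
        # per-unit gain the trader took from LPs: a long filled too low or a short too high
        gap = (proven_price - order.exec_price) * (1 if order.size > 0 else -1)
        if gap <= 0:
            raise ValueError("proof does not show adverse selection")
        recovered = gap * abs(order.size)
        order.exec_price = proven_price  # applied to the trader as slippage
        order.challenged = True
        reward = recovered * self.keeper_share
        self.keeper_rewards[keeper] = self.keeper_rewards.get(keeper, 0.0) + reward
        self.lp_recovered += recovered - reward
        return recovered


book = OptimisticSettlement(challenge_period=60, keeper_share=0.5)
oid = book.create(size=2.0, submitted_price=2000.0, now=100)
print(book.challenge(oid, "keeper-1", proven_price=2010.0, now=130), book.keeper_rewards)
```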

This model has two advantages. First, it reduces the cost burden on the user, who only pays the gas fee for order creation and oracle update in a single transaction, with no additional settlement transaction. Second, it discourages frontrunning and protects the integrity of the LP pool: given a healthy keeper network, there are economic incentives to submit proofs whenever the system is being frontrun.

However, a few open questions remain before this idea can be brought to life:

- Defining ‘adverse selection’: How can the system differentiate between users who submitted stale prices due to network latency and users who intentionally tried to arbitrage the system? An initial idea could be to measure volatility over the window defined in the staleness check (e.g. 15 seconds); if volatility exceeds the net execution fee, the order could be labeled as a potential exploit.

- Setting an appropriate challenge period: What is an appropriate time window for keepers to challenge prices, given that toxic order flow may only be open for a short amount of time? Batch attestations would likely be more gas efficient, but given the unpredictability of order flow over time, it would be hard to time batch attestations so that all price feeds are attested with ample time to be challenged.

- Economic incentives for keepers: Keepers incur nontrivial gas costs when posting proofs. For submitting proofs to be rational for economically incentivized keepers, the reward for a winning proof must exceed the gas cost of submitting it. With varying order sizes, this assumption may not always hold.

- Close orders: Would similar mechanisms need to be put in place for closing orders, and if so, what user experience tradeoffs might there be?

- Ensuring “unreasonable” slippage is not applied to the user: In a flash crash scenario, a very large price discrepancy between order creation and on-chain confirmation could occur. Some sort of backstop or circuit breaker may be needed; one option to consider is using Pyth’s EMA price to check price feed stability before use.
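A minimal Python sketch of three of the checks raised above: the volatility-versus-fee heuristic for flagging adverse selection, the keeper's break-even condition, and an EMA-based circuit breaker. All thresholds, names, and the range-based volatility proxy are assumptions for illustration; the EMA price itself would come from the oracle.

```python
from typing import List


def flag_potential_exploit(window_prices: List[float], net_execution_fee_rate: float) -> bool:
    """Flag an order if price movement inside the staleness window exceeds the fee.

    'Volatility' is approximated as the high-low range relative to the first price,
    and the fee is expressed as a fraction of notional (both assumptions).
    """
    move = (max(window_prices) - min(window_prices)) / window_prices[0]
    return move > net_execution_fee_rate


def keeper_should_challenge(recoverable_usd: float, keeper_share: float, gas_usd: float) -> bool:
    """A rational keeper only posts a proof if its reward beats the gas cost."""
    return recoverable_usd * keeper_share > gas_usd


def within_ema_band(price: float, ema_price: float, max_deviation: float) -> bool:
    """Backstop: only use a price that sits close to the EMA price (band is hypothetical)."""
    return abs(price - ema_price) / ema_price <= max_deviation


print(flag_potential_exploit([2000.0, 2004.0, 1998.0, 2010.0], net_execution_fee_rate=0.003))
print(keeper_should_challenge(recoverable_usd=10.0, keeper_share=0.5, gas_usd=8.0))
print(within_ema_band(1900.0, ema_price=2000.0, max_deviation=0.03))
```

With a 50% keeper share and 8 USD of gas, any order whose recoverable value is at or below 16 USD goes unchallenged, which is the order-size concern in the keeper-incentive question.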

ZK Co-processors – an Alternative Form of Data Consumption

Another direction worth exploring is ZK co-processors, which are designed to perform complex computation off-chain with access to on-chain state, and to provide a proof, verifiable permissionlessly, that the computation was executed correctly. Projects like Axiom enable contracts to query historical blockchain data, perform computations off-chain, and submit a ZK proof that the result was computed correctly from valid on-chain data. Co-processors open up the potential for a manipulation-resilient custom TWAP oracle built from historical prices across multiple DeFi-native liquidity sources (e.g. Uniswap + Curve).
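A minimal Python sketch of the kind of computation a co-processor could prove: a time-weighted average over historical observations from each liquidity source, combined across sources. The observations, source names, and weights are hypothetical; the sketch does not use Axiom's API or generate any proof.

```python
from typing import Dict, List, Tuple

Observation = Tuple[int, float]  # (timestamp, price); each price holds until the next one


def twap(observations: List[Observation], start: int, end: int) -> float:
    """Time-weighted average price of a step-function series over [start, end)."""
    if end <= start:
        raise ValueError("empty window")
    if not observations or observations[0][0] > start:
        raise ValueError("need an observation at or before the window start")
    total = 0.0
    for i, (t, price) in enumerate(observations):
        next_t = observations[i + 1][0] if i + 1 < len(observations) else end
        seg_start, seg_end = max(t, start), min(next_t, end)
        if seg_end > seg_start:
            total += price * (seg_end - seg_start)
    return total / (end - start)


def multi_source_twap(sources: Dict[str, List[Observation]], weights: Dict[str, float],
                      start: int, end: int) -> float:
    """Weighted average of per-source TWAPs (weights, e.g. by liquidity, are hypothetical)."""
    total_weight = sum(weights[name] for name in sources)
    return sum(twap(obs, start, end) * weights[name] for name, obs in sources.items()) / total_weight


sources = {
    "uniswap": [(0, 2000.0), (60, 2010.0)],
    "curve": [(0, 2004.0)],
}
print(multi_source_twap(sources, {"uniswap": 3.0, "curve": 1.0}, start=0, end=120))
```

Time-weighting is what provides manipulation resistance: a one-second spike to 5,000 inside a two-minute window of 2,000 only moves that source's TWAP to 2,025.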

ZK co-processors will expand the range of data that can be offered to dApps in a secure manner, compared to traditional oracles, which only provide the latest asset price data (Pyth does offer an EMA price that developers can use as a reference check for the latest price). This allows applications to introduce more business logic that works with historical blockchain data to improve protocol security or strengthen user experience.

However, ZK co-processors are still early in their development, and some bottlenecks remain, such as:

- Potentially long proving times when fetching and computing over large amounts of blockchain data in the co-processor environment

- Only blockchain data is available, which does not solve the need to communicate with non-web3 apps securely.

Oracle Free Solutions – the Future of DeFi?

An alternative school of thought is that oracle dependencies in DeFi can be addressed by designing primitives from the ground up that remove the need for an external price feed. A recent development in this space is the use of AMM LP tokens as a means of pricing, based on the core idea that LP positions in constant function market makers are tokens representing preset weights of two assets, with an automated pricing formula between them (e.g. xy=k). By leveraging LP tokens (as collateral, as the loan underlying, or, in more recent use cases, by moving v3 LP positions across different ticks), a protocol can access the price information it would normally require from oracles. This has enabled a new wave of oracle-free solutions that do not face the same challenges as their oracle-dependent counterparts. Concretely, some examples of applications building in this direction include:
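A minimal Python sketch of why an LP position can stand in for a price feed: in a toy constant-product (xy=k) pool with fees ignored, the marginal price and the value of an LP share follow directly from reserves. The class and its method names are illustrative and not any AMM's contract interface.

```python
from dataclasses import dataclass


@dataclass
class ConstantProductPool:
    """Toy x*y = k pool holding x units of asset X and y units of asset Y (fees ignored)."""
    x: float
    y: float

    def spot_price(self) -> float:
        """Marginal price of X in units of Y, read directly from reserves."""
        return self.y / self.x

    def swap_x_for_y(self, dx: float) -> float:
        """Sell dx of X into the pool; return dy received while keeping x*y constant."""
        k = self.x * self.y
        new_x = self.x + dx
        new_y = k / new_x
        dy = self.y - new_y
        self.x, self.y = new_x, new_y
        return dy

    def lp_value_in_y(self, lp_share: float) -> float:
        """Value of an LP share in units of Y, priced by the pool formula itself."""
        return lp_share * (self.x * self.spot_price() + self.y)


pool = ConstantProductPool(x=100.0, y=200_000.0)
print(pool.spot_price())
print(pool.lp_value_in_y(0.1))
```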

Panoptic is building a perpetual, oracle-free options protocol leveraging Uniswap v3 concentrated liquidity positions. As a concentrated liquidity position is fully converted into the quote asset when the spot price rises above its upper bound (and fully into the base asset when the price falls below its lower bound), the payoff of a liquidity provider closely resembles that of a put option seller. Its options marketplace thus operates on liquidity providers depositing LP assets or positions, and option buyers and sellers borrowing and moving liquidity in and out of ranges to create dynamic option payoffs. As loans are denominated in LP positions, oracles are not needed for settlement.
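A short Python sketch of the standard concentrated-liquidity formulas (as in the Uniswap v3 whitepaper) for a position with liquidity L over the price range [p_lower, p_upper]. Prices are quoted as token1 per token0, with token0 treated as the base asset (e.g. ETH) and token1 as the quote asset (e.g. USDC) for illustration. Fees, which play the role of the option premium, are ignored, and the numbers are hypothetical.

```python
from math import sqrt
from typing import Tuple


def position_amounts(liquidity: float, price: float, p_lower: float,
                     p_upper: float) -> Tuple[float, float]:
    """(amount0, amount1) held by a range position; price is token1 per token0."""
    sp, sa, sb = sqrt(price), sqrt(p_lower), sqrt(p_upper)
    if price <= p_lower:   # below the range: entirely token0 (the base asset)
        return liquidity * (1 / sa - 1 / sb), 0.0
    if price >= p_upper:   # above the range: entirely token1 (the quote asset)
        return 0.0, liquidity * (sb - sa)
    return liquidity * (1 / sp - 1 / sb), liquidity * (sp - sa)


def position_value(liquidity: float, price: float, p_lower: float, p_upper: float) -> float:
    """Position value denominated in token1."""
    amount0, amount1 = position_amounts(liquidity, price, p_lower, p_upper)
    return amount0 * price + amount1


for p in (1000.0, 1600.0, 2025.0, 2500.0, 4000.0):
    print(p, round(position_value(1000.0, p, 1600.0, 2500.0), 2))
```

With L = 1,000 over [1,600, 2,500], the value is capped at 10,000 above the range and falls linearly below it (8,000 at 1,600 and 5,000 at 1,000), the same shape as a short put.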

Infinity Pools is building a liquidation-free, oracle-free leveraged trading platform, also leveraging Uniswap v3 concentrated liquidity positions. LPs on Uniswap v3 can lend out their LP tokens; traders deposit some collateral, borrow an LP token, and redeem it for the underlying asset they are trading directionally. Upon repayment, the loan is denominated in either the base or the quote asset depending on the price at repayment, which can be calculated directly from the LP composition on Uniswap, removing oracle dependence.

Timeswap is building a fixed-term, liquidation-free, oracle-free lending platform. It is a three-sided marketplace with lenders, borrowers, and liquidity providers. It differs from traditional lending markets in that it uses “time-based” liquidations, as opposed to price-based liquidations. The liquidity providers’ role in the protocol is analogous to that of a liquidity provider in a traditional AMM. LPs in dexes are automated to always buy from sellers and sell to buyers, whereas in Timeswap LPs always lend to borrowers and borrow from lenders, serving a similar market-making role. They are also responsible for absorbing loan defaults, and receive priority on forfeited collateral as compensation.

Conclusion

Pricing data is still an invaluable component of many decentralized applications today, and the growth in oracles’ total value secured over time continues to affirm their product-market fit. This article aimed to raise awareness of the challenges around OEV we currently face, and to outline the design space for push-based, pull-based, and alternative designs using AMM LPs or off-chain co-processors.

We are excited to see energetic builders looking to tackle these difficult design challenges. If you are working on something disruptive in this space, we would love to hear from you!

References and Acknowledgements

Thanks to Jonathan Yuen and Wintersoldier for your contributions and conversations that inspired many of the ideas in this article.

Thanks to Erik Lie, Richard Yuen (Hailstone), Marc, Mario Bernardi, Anirudh Suresh (Pyth), Ugur Mersin (API3 DAO), and Mimi (Timeswap), for their valuable comments, feedback and review.

[1] https://defillama.com/oracles (14 Nov)

[2] OEV Litepaper: https://drive.google.com/file/d/1wuSWSI8WY9ChChu2hvRgByJSyQlv_8SO/edit

[3] Frontrunning on Synthetix: A History, by Kain Warwick: https://blog.synthetix.io/frontrunning-synthetix-a-history/

[4] https://snapshot.org/#/rook.eth/proposal/0x523ea386c3e42c71e18e1f4a143533201083655dc04e6f1a99f1f0b340523c58

[5] https://docs.pyth.network/documentation/pythnet-price-feeds/on-demand

[6] https://docs.pyth.network/documentation/solana-price-feeds/best-practices#latency

[7] Aave liquidation figures: https://dune.com/queries/3247324

[8] https://drive.google.com/file/d/1wuSWSI8WY9ChChu2hvRgByJSyQlv_8SO/edit

[9] https://twitter.com/bboexchange/status/1726801832784318563

[10] https://gmx-io.notion.site/gmx-io/GMX-Technical-Overview-47fc5ed832e243afb9e97e8a4a036353

[11] https://gmxio.substack.com/p/gmx-v2-powered-by-chainlink-data

[12] https://docs.chain.link/data-streams

[13] https://sips.synthetix.io/sips/sip-281/

Appendix

Definitions: Push vs Pull Oracles

Push-based oracles maintain prices off-chain in a P2P network, and nodes update prices on-chain based on a predefined set of rules. Using Chainlink as an example, prices are updated based on two trigger parameters: deviation threshold and heartbeat. The ETH/USD price feed on Ethereum below is updated whenever the off-chain price deviates 0.5% from the latest on-chain price, or when the 1-hour heartbeat timer reaches zero.
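A minimal Python sketch of the two trigger parameters, using the ETH/USD (0.5%, 1 hour) and CRV/USD (1%, 24 hours) settings quoted in this appendix. The price series and the helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PushFeedConfig:
    deviation_threshold: float  # e.g. 0.005 for 0.5%
    heartbeat: int              # seconds


def should_push(cfg: PushFeedConfig, onchain_price: float, last_update: int,
                offchain_price: float, now: int) -> bool:
    """Push when the off-chain price deviates past the threshold or the heartbeat expires."""
    deviated = abs(offchain_price - onchain_price) / onchain_price >= cfg.deviation_threshold
    expired = now - last_update >= cfg.heartbeat
    return deviated or expired


def count_updates(cfg: PushFeedConfig, prices: List[float], interval: int) -> int:
    """Replay an off-chain price series sampled every `interval` seconds; count pushes."""
    onchain, last, pushes = prices[0], 0, 0
    for i, p in enumerate(prices[1:], start=1):
        now = i * interval
        if should_push(cfg, onchain, last, p, now):
            onchain, last, pushes = p, now, pushes + 1
    return pushes


eth_usd = PushFeedConfig(deviation_threshold=0.005, heartbeat=3600)
crv_usd = PushFeedConfig(deviation_threshold=0.01, heartbeat=86400)
# Hypothetical day of hourly prices swinging between 0.995 and 1.008
prices = [1.0] + [1.008 if i % 2 else 0.995 for i in range(1, 25)]
print(count_updates(eth_usd, prices, 3600), count_updates(crv_usd, prices, 3600))
```

Over the same hypothetical day, the ETH/USD-style feed pushes 24 updates while the CRV/USD-style feed pushes only one, at the heartbeat, even though the price swung by more than 1% between consecutive hours.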

In this model, the oracle operator must pay transaction fees for each price update, a fundamental tradeoff between cost and scalability. Increasing the number of price feeds, supporting additional blockchains, or pushing more frequent updates all incur additional transaction costs. Consequently, long-tail assets with wider trigger parameters will inevitably have less reliable price feeds. The CRV/USD example below illustrates this: it has a 1% deviation threshold and a 24-hour heartbeat, so if the price doesn’t deviate by more than 1% within 24 hours, only one new price is pushed on-chain in that period. Intuitively, the lack of price feed granularity for long-tail assets creates additional risk considerations for applications that want to enable markets for these assets, which partially explains why most DeFi activity still centers on the most liquid, large-cap tokens.

Pull-based oracles, in contrast, allow prices to be pulled on-chain on demand. Pyth, the most prominent example today, streams price updates off-chain and signs each update so that anyone can verify its authenticity. Prices provided by publishers (equivalent to Chainlink nodes) are aggregated and maintained on Pythnet, a private blockchain based on Solana’s codebase. When an update is required, the data is bridged via Wormhole, whose guardians attest to the data on Pythnet; the attested data can then be pulled on-chain permissionlessly.

The diagram above describes the architecture of Pyth price feeds: when a price update is needed on-chain, users can request an update via the Pyth API, whereupon an attested price on Pythnet is sent to the Wormhole contract, which observes it and creates a signed VAA that can be verified on any blockchain where Pyth’s contracts are deployed.
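A minimal Python sketch of what "verifiable on any blockchain" relies on: a VAA is accepted only if enough guardians signed it. It assumes a 19-guardian set with a two-thirds-plus-one quorum, and uses HMAC as a stand-in for the guardians' real public-key signatures, so the function names and key handling are illustrative rather than Wormhole's implementation.

```python
import hashlib
import hmac
from typing import Dict, List


def guardian_sign(key: bytes, payload: bytes) -> bytes:
    """Stand-in for a guardian's signature over the attested payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def quorum(num_guardians: int) -> int:
    """Two-thirds-plus-one threshold (13 for a 19-guardian set)."""
    return num_guardians * 2 // 3 + 1


def verify_vaa(payload: bytes, signatures: Dict[int, bytes], guardian_keys: List[bytes]) -> bool:
    """Accept the payload only if enough distinct guardians produced valid signatures."""
    valid = sum(
        1
        for idx, sig in signatures.items()
        if 0 <= idx < len(guardian_keys)
        and hmac.compare_digest(sig, guardian_sign(guardian_keys[idx], payload))
    )
    return valid >= quorum(len(guardian_keys))


keys = [bytes([i]) * 32 for i in range(19)]  # hypothetical guardian keys
payload = b"ETH/USD|2000.00|1700000000"
sigs = {i: guardian_sign(keys[i], payload) for i in range(13)}
print(quorum(len(keys)), verify_vaa(payload, sigs, keys))  # 13 True
```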